Google uppercuts Nvidia in the AI boxing match: will they be able to stay in the ring? 🥊

Either way, competition is only good for us ;)

Google's TPU-studded glove, used to land the punch 💥

Apologies in advance for the boxing references; it just makes me giggle to picture CEOs fighting over our approval.

Google's got too much money, and this new venture into the AI hardware race against Nvidia is a good showcase of that, but it's not necessarily a bad thing. Nvidia is an absolute powerhouse of a company nowadays, in no small part thanks to the AI boom: nearly every AI company that wants to compete is using Nvidia GPUs to train its next groundbreaking models.

It is also Nvidia's GPUs that are a big part of the reason for the massive spike in power usage we have seen globally over the past few years.

So what is a TPU, and how is Google using it to compete with such a force 🫨?

Well, a Tensor Processing Unit (better known as a TPU) is Google's answer not to making Cyberpunk playable with ray tracing, but to making AI training and inference much more affordable and efficient!

GPUs, although amazing tools for building and using AI models, are really a Swiss army knife: they're good at a great number of things (gaming obviously being one of them), but that also means they can't be the best at all of them. A TPU, on the other hand, is designed and optimized from the ground up for one job: the heavy matrix math behind training and running AI models. That focus lets it use less power while delivering better performance on AI workloads.

Here are just a few stats on which TPUs beat GPUs:

- 1.2x to 1.7x better performance per dollar compared to Nvidia A100 GPUs

- 30-50% reduction in power consumption

- 20-30% overall cost reduction for similar deployments
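To make "performance per dollar" a bit more concrete: if a chip does r times as much work per dollar, a fixed job costs 1/r as much on it. The minimal sketch below just runs that arithmetic on the 1.2x and 1.7x figures above. The function name is my own, and it assumes a fixed workload where the ratio holds across the whole job.

```python
def cost_saving(perf_per_dollar_ratio: float) -> float:
    """Fraction of spend saved on a fixed workload when one chip delivers
    `perf_per_dollar_ratio` times the work per dollar of another."""
    if perf_per_dollar_ratio <= 0:
        raise ValueError("ratio must be positive")
    # The same job costs 1/ratio as much on the more efficient chip.
    return 1 - 1 / perf_per_dollar_ratio


for ratio in (1.2, 1.7):
    print(f"{ratio}x perf per dollar -> {cost_saving(ratio):.1%} cheaper for the same job")
```

On those assumptions, 1.2x to 1.7x works out to roughly 17% to 41% less spend for the same job, which comfortably brackets the 20-30% overall figure.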

So where Nvidia is offering a tool from its very impressive Swiss army knife to cut this imaginary AI watermelon, Google is stepping in with a samurai sword that does the job much more efficiently.

The Mary Meeker Wake-Up Call 📊

Huh? Who is Mary Meeker? (A renowned tech analyst; don't worry, I've got you covered on that one.)

This new hardware couldn't have arrived at a better time. AI is booming, and reports from leading experts show that AI innovation and usage are only set to increase in the coming years. That means there's a lot of demand for the right hardware to power the new models, which will undoubtedly come with massive power and compute needs.

To pile on this notion even more, last month the aforementioned legendary tech analyst Mary Meeker dropped her first major report in five years! It was a massive 340-page deep dive into the current and future state of AI. It was very optimistic about AI, using the word "unprecedented" no fewer than 51 times. And buried in all those charts and graphs was a fascinating revelation about the infrastructure war happening behind the scenes.

While everyone's been obsessing over ChatGPT's meteoric rise to 800 million users, Meeker points out something crucial: data centers now consume 1.5% of global electricity and are growing 12% annually; that's 4x faster than total global electricity consumption 👀. The AI boom isn't just about clever algorithms; it's about who can afford to keep the lights on.
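Why does "4x faster" matter so much? If data centers grow 12% a year and total consumption grows a quarter as fast (about 3%), the data center share gets multiplied by 1.12 / 1.03 every year. Here's a rough sketch of that projection. The helper name is hypothetical, and it assumes both growth rates stay constant, which they almost certainly won't for a full decade.

```python
def project_share(share: float, dc_growth: float, total_growth: float, years: int) -> float:
    """Data centers' share of global electricity after `years`, assuming both
    growth rates stay constant (a big simplifying assumption)."""
    # Each year data center demand grows by dc_growth and total demand by
    # total_growth, so the share is multiplied by the ratio of the two.
    return share * ((1 + dc_growth) / (1 + total_growth)) ** years


DC_GROWTH = 0.12              # 12% per year, as quoted from the report
TOTAL_GROWTH = DC_GROWTH / 4  # "4x faster" implies roughly 3% per year

for years in (0, 5, 10):
    share = project_share(0.015, DC_GROWTH, TOTAL_GROWTH, years)
    print(f"year +{years}: {share:.2%} of global electricity")
```

On those assumptions the share more than doubles within a decade, to roughly 3.5%. The exact number matters less than the shape: a small slice growing faster than the whole keeps compounding.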

And that's where Google's TPU strategy gets fascinating.

The Two-Pronged Master Plan 🎯

Now I've been yapping about how great TPUs are, throwing around statistics like I'm some sort of scientist, but what about real, practical use cases? Is anyone even using these TPUs yet?

Well, of course, Google themselves are! They built their models on them, and it's one of the main reasons (their fat stack of cash aside, of course) why they've been able to scale so well and offer pricing that competes with OpenAI and Anthropic. Not only that, but I think we might also be underestimating the scale of Google's AI network. They have put it into almost all of their products (most of which come for free, btw!). Take Gemini, for instance: for about two years now, many new Android phones have shipped with Google's AI models baked into the phone itself (again, all for free??). And earlier this year, even my Samsung watch switched to the Gemini assistant, so now I can generate cursed AI images from my wrist!

This is where Google's strategy gets genuinely brilliant. They're not just building better hardware, they're building an entire ecosystem.

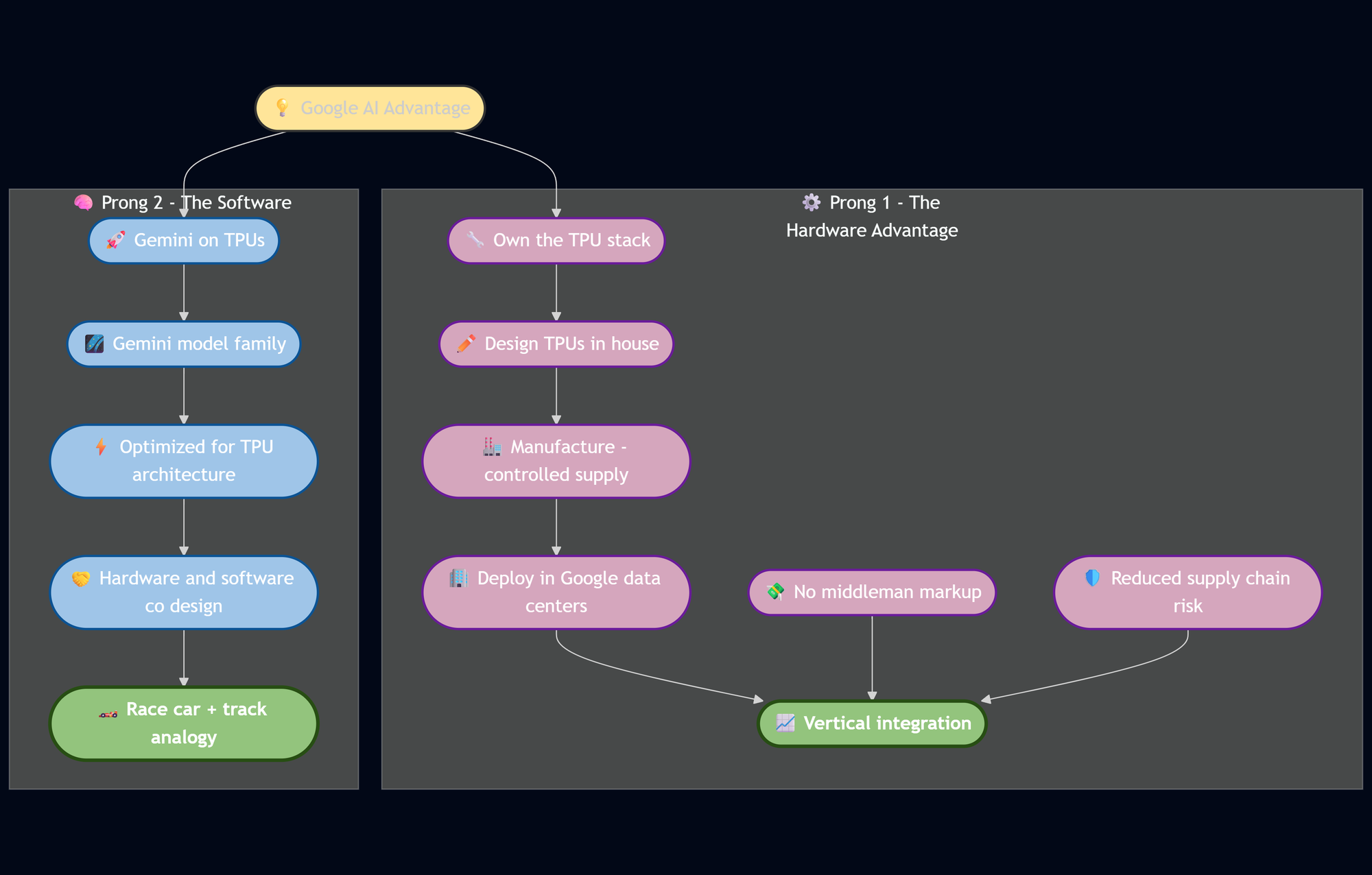

Prong 1: The Hardware Advantage. Google controls the entire TPU stack. They design the chips, have them fabricated to their own spec, and deploy them in their own data centers. No middleman markup, far less supply chain drama, just pure vertical integration.

Prong 2: The Software Synergy. Their Gemini models aren't just competitive with OpenAI's and Anthropic's; they're specifically optimized to run on TPU architecture. It's like building both the race car AND the track it runs on!

The payoff? As Meeker notes, inference costs for AI models across the industry have dropped 99% in just two years, and Google's own costs are likely falling even faster because they control both sides of the equation.
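For a sense of how steep that curve is: if a 99% drop over two years compounded evenly (an assumption; real price cuts tend to arrive in jumps), costs fell by about 90% each year, since 0.1 × 0.1 = 0.01. A quick sketch of that arithmetic, with a helper name of my own:

```python
def annual_decline(total_drop: float, years: float) -> float:
    """Constant yearly decline that compounds to `total_drop` over `years`."""
    remaining = 1 - total_drop            # a 99% drop leaves 1% of the original cost
    return 1 - remaining ** (1 / years)   # undo the compounding to get the per-year rate


print(f"{annual_decline(0.99, 2):.0%} cheaper every year")
```

In other words, under that even-compounding assumption, each year the same request cost roughly a tenth of what it did the year before.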

But Wait, What About the Competition? 🤔

Now, I know what you're thinking: "Surely Microsoft and OpenAI won't just roll over?" And you're right! Microsoft is pouring billions into Azure infrastructure, and there's talk of it developing its own custom chips. With OpenAI being such a close partner, I don't doubt OpenAI will look to benefit too, but in the meantime, it's getting tempted by the same cost reductions I've been mentioning 😉.

But even with this potential competition, Google has a massive head start. They've been iterating on TPUs since 2016, while most of their rivals are only now waking up to the importance of custom silicon built for AI. It's like they've been training for a marathon while everyone else just realized they need to buy running shoes.

The Energy Elephant in the Room 🐘

Let's talk about the uncomfortable truth that Mary Meeker's report laid bare: the Big Six tech companies invested $212 billion in AI infrastructure in 2024 alone, up 63% year-on-year. That's not just a big number; it's a "small country GDP" kind of number. And with that kind of data center build-out comes a massive increase in power usage. Data centers already take up 1.5% of global electricity, which at a glance doesn't seem like much (considering that housing globally takes up around 20%), but it's the rate of growth that is concerning: a doubling of this power usage within the next several years is not a far-fetched idea.
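The doubling worry comes straight from compound growth: at a steady 12% a year (the data center growth rate quoted earlier, assuming it holds), demand doubles in ln 2 / ln 1.12 years. The sketch below runs that number, plus the 2023 baseline implied by "$212 billion, up 63%". Both helpers are illustrative and not taken from the report.

```python
import math


def doubling_time(annual_growth: float) -> float:
    """Years for a quantity growing at a constant `annual_growth` rate to double."""
    return math.log(2) / math.log(1 + annual_growth)


def previous_year(value: float, yoy_growth: float) -> float:
    """Back out last year's value from this year's value and its year-on-year growth."""
    return value / (1 + yoy_growth)


print(f"Data center power doubles in ~{doubling_time(0.12):.1f} years at 12%/yr")
print(f"2023 spend implied by $212B (+63%): ~${previous_year(212, 0.63):.0f}B")
```

So at 12% a year, power usage doubles in roughly six years, and the $212 billion figure implies about $130 billion the year before.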

It's even estimated that right now, each prompt to an average ChatGPT model consumes as much electricity as 10-15 Google searches, and the water needed to cool the servers adds up too (one widely cited estimate puts it at roughly a 500 ml bottle for every 10-50 prompts). These are all estimates, of course, so take them with a grain of salt, but think about that before you ask ChatGPT how to screw in a light bulb 💡

Google's TPUs, with their superior power efficiency, aren't just saving money; they're an attempt to make AI sustainable at scale. It might be the best approach to sustaining the AI race: instead of only producing more power, we make these AI models do more with less.

What This Means for the Future 🔮

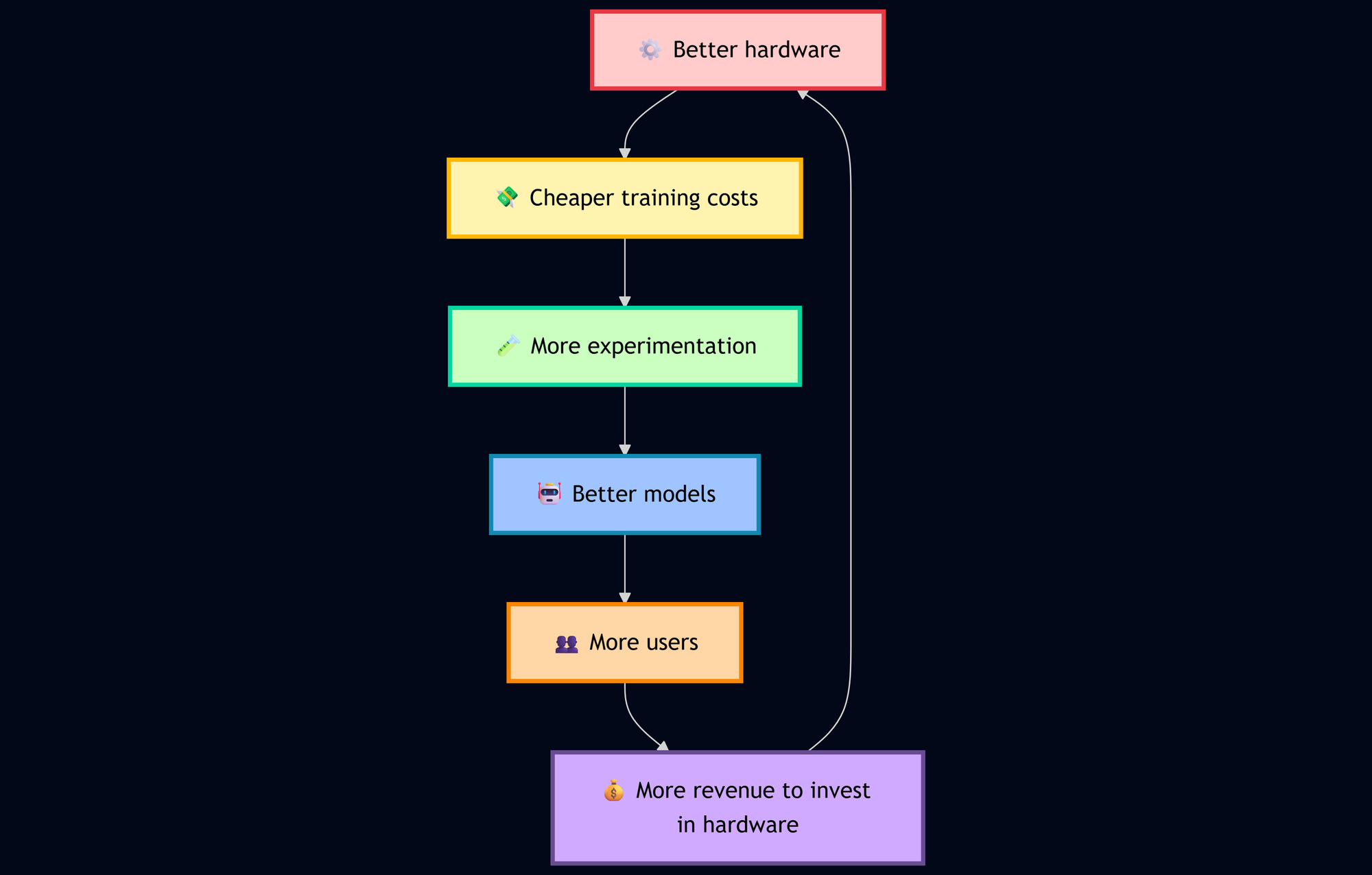

The implications are huge. If Google can maintain their hardware advantage while keeping their AI models competitive, they create a virtuous cycle:

Rinse, repeat, dominate.

The Bottom Line 💡

While everyone's been focused on the AI model wars, Google's been quietly building the infrastructure to win the larger battle. They're not just competing on who has the smartest AI, they're competing on who can afford to keep it running.

My late-night model training sessions taught me that in AI, efficiency isn't just about saving money, it's about what's possible. And with TPUs, Google has made a lot more possible for a lot less cost.

So the next time the $1,200 GPU powering your local model starts screaming while Llama 3 tells you that "pole-vaulting over a moving vehicle is probably not advisable," remember: somewhere in a Google data center, a TPU is doing the same calculation for a fraction of the power, cost, and drama.

The AI revolution isn't just about who has the best algorithms. It's about who can afford to run them. And right now, Google's holding a royal flush of (tensor processing) cards.

What do you think? Is Google's TPU advantage insurmountable, or will the competition catch up? Drop a comment below, I'd love to hear your take on the infrastructure wars shaping our AI future!